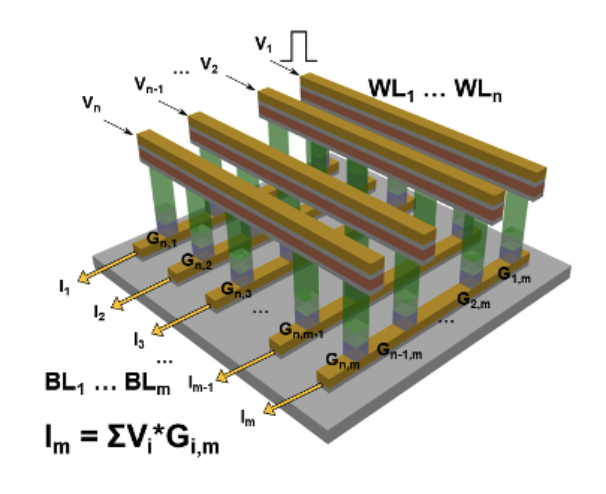

As the amount of data processed by computing systems grows exponentially, data transfer between memory and processor has become a major bottleneck that dominates system-level energy consumption in advanced machine learning applications. For example, training GPT-3 required over 1,000 megawatt-hours, equivalent to the energy consumed by ~700,000 homes in a day. In-memory computing has been proposed to circumvent this bottleneck, which arises from the von Neumann architecture, by minimizing or eliminating energy-intensive data transfer between memory and processor. To that end, we develop new device architectures that harness the nonlinear switching characteristics of quantum materials to map neural network functions onto hardware. We employ 3D heterogeneous integration of new nanodevices for low-power edge processing. Our overall goal is to engineer nanomaterials for enhanced AI functionality.
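The operation an in-memory computing array typically accelerates is the matrix-vector multiply inside each neural network layer. The Python sketch below is a minimal, idealized model under stated assumptions: each weight is stored as a device conductance in a resistive crossbar, input voltages on the rows produce column currents equal to a weighted sum (Ohm's law per device, Kirchhoff's current law per column), and a rectifying neuron applies a ReLU. The helper names (`quantize`, `map_weights`, `column_currents`, `crossbar_layer`), the differential G+/G- weight encoding, the 100 µS conductance range and the five programmable levels are hypothetical choices for illustration, not parameters of the group's devices; wire resistance, device variability and noise are ignored.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize(g: float, g_max: float, levels: int) -> float:
    """Snap a target conductance onto `levels` evenly spaced states in [0, g_max].

    Hypothetical stand-in for programming a multi-level device.
    """
    g = min(max(g, 0.0), g_max)  # a device cannot leave its conductance range
    step = g_max / (levels - 1)
    return round(g / step) * step


def map_weights(weights: Matrix, g_max: float, levels: int) -> Tuple[Matrix, Matrix, float]:
    """Encode signed weights as two non-negative conductance arrays (assumed G+ minus G- scheme)."""
    w_abs_max = max(abs(w) for row in weights for w in row)
    scale = g_max / w_abs_max  # siemens per unit weight
    g_pos = [[quantize(max(w, 0.0) * scale, g_max, levels) for w in row] for row in weights]
    g_neg = [[quantize(max(-w, 0.0) * scale, g_max, levels) for w in row] for row in weights]
    return g_pos, g_neg, scale


def column_currents(voltages: List[float], g: Matrix) -> List[float]:
    """Each device passes I = V * G (Ohm's law); each column sums its currents (Kirchhoff)."""
    currents = [0.0] * len(g[0])
    for v, row in zip(voltages, g):
        for j, g_ij in enumerate(row):
            currents[j] += v * g_ij
    return currents


def crossbar_layer(x: List[float], weights: Matrix,
                   g_max: float = 1e-4, levels: int = 5) -> List[float]:
    """One fully connected layer: analog multiply-accumulate followed by a ReLU."""
    g_pos, g_neg, scale = map_weights(weights, g_max, levels)
    i_pos = column_currents(x, g_pos)
    i_neg = column_currents(x, g_neg)
    # The differential readout converts current back to weight units; the ReLU is an
    # idealized model of a rectifying activation neuron.
    return [max(0.0, (ip - ineg) / scale) for ip, ineg in zip(i_pos, i_neg)]


if __name__ == "__main__":
    W = [[0.5, -1.0],   # weights from input 0 to outputs 0 and 1
         [1.0, 0.25]]   # weights from input 1 to outputs 0 and 1
    x = [1.0, 2.0]      # inputs applied directly as row read voltages (volts)
    print(crossbar_layer(x, W))  # approximately [2.5, 0.0]
```

In this model, the multiply-accumulate happens where the weights are stored, so no weight is moved to a separate processor. Because only `levels` states are available, a weight that does not land on a state is rounded (for example, `quantize(3e-5, 1e-4, 5)` returns about 2.5e-5), which illustrates why the number of reliable conductance levels affects accuracy.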

Relevant references:

- Park J, Kumar A, Zhou Y, Oh S, Kim JH, Shi Y, Jain S, Hota G, Nagle AL, Schuman CD, Cauwenberghs G. Multi-level, Forming Free, Bulk Switching Trilayer RRAM for Neuromorphic Computing at the Edge. arXiv preprint arXiv:2310.13844, 2023.

- Shi Y, Oh S, Park J, Del Valle J, Salev P, Schuller IK, Kuzum D. Integration of Ag-CBRAM crossbars and Mott ReLU neurons for efficient implementation of deep neural networks in hardware. Neuromorphic Computing and Engineering, 2023.

- Shi Y, Ananthakrishnan A, Oh S, Liu X, Hota G, Cauwenberghs G, Kuzum D. A neuromorphic brain interface based on RRAM crossbar arrays for high throughput real-time spike sorting. IEEE Transactions on Electron Devices, 69(4):2137-44, 2021.

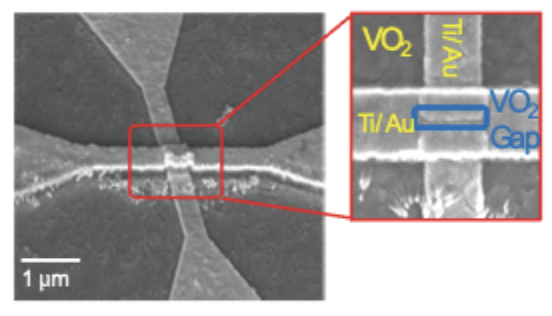

- Oh S, Shi Y, Del Valle J, Salev P, Lu Y, Huang Z, Kalcheim Y, Schuller IK, Kuzum D. Energy-efficient Mott activation neuron for full-hardware implementation of neural networks. Nature Nanotechnology, 16(6):680-7, 2021.

- Kuzum D, Yu S, Wong HP. Synaptic electronics: materials, devices and applications. Nanotechnology, 24(38):382001, 2013.

Keynote presentation “Device Algorithm Codesign for Efficient In Memory Computing” by Duygu Kuzum, University of California San Diego